GamersOnLinux

Apr

07

The city of Harran is in the grip of a devastating zombie apocalypse, and small factions of survivors can't escape. Join a friend in co-op and explore a massive city using parkour skills to traverse any terrain! Loot buildings, level up skills, help the locals and of course... slay zombies in every manner of goriness you can imagine! There are so many ways to kill mindless biters... Dying Light is a zombie sandbox.

Initially I tried the native Linux version...

Mar

16

Östertörn, Sweden is overrun with robots! Not your run-of-the-mill Lost in Space robot, but dangerous, animal-like killer machines with you as their prey! Explore an enormous island with towns, forests, tourist traps and of course... a lot of robots to slay!

Generation Zero is a co-op and/or singleplayer campaign with a lot of focus on stealth. You can run out into the open and start laying them down with bullets, but it isn't gonna last long....

Mar

01

Survive a zombie apocalypse on the island of Banoi! This band of friends hacks and loots its way across a massive resort island, crafting weapons, tossing Molotovs and slaying various types of minions. Dead Island was released in 2011 and still looks as beautiful and detailed as many AAA games released today! I still can't believe the amount of detail in every object, plant, weapon and animation!

Dead Island in 2024 still has a native Linux port available...

Feb

09

Bioshock 2 is the second installment of the underwater euphoria franchise. Visit Rapture once again, but 10 years later and this time as a Big Daddy! Adopt a Little Sister and save ADAM to upgrade your skills but, as always, you are not alone! Fight against all kinds of splicers, turrets and even a Big Sister. Learn to wield your skills along with your drill and guns! This ain't your first rodeo, so get out there and save Rapture!

I originally posted a...

Jan

29

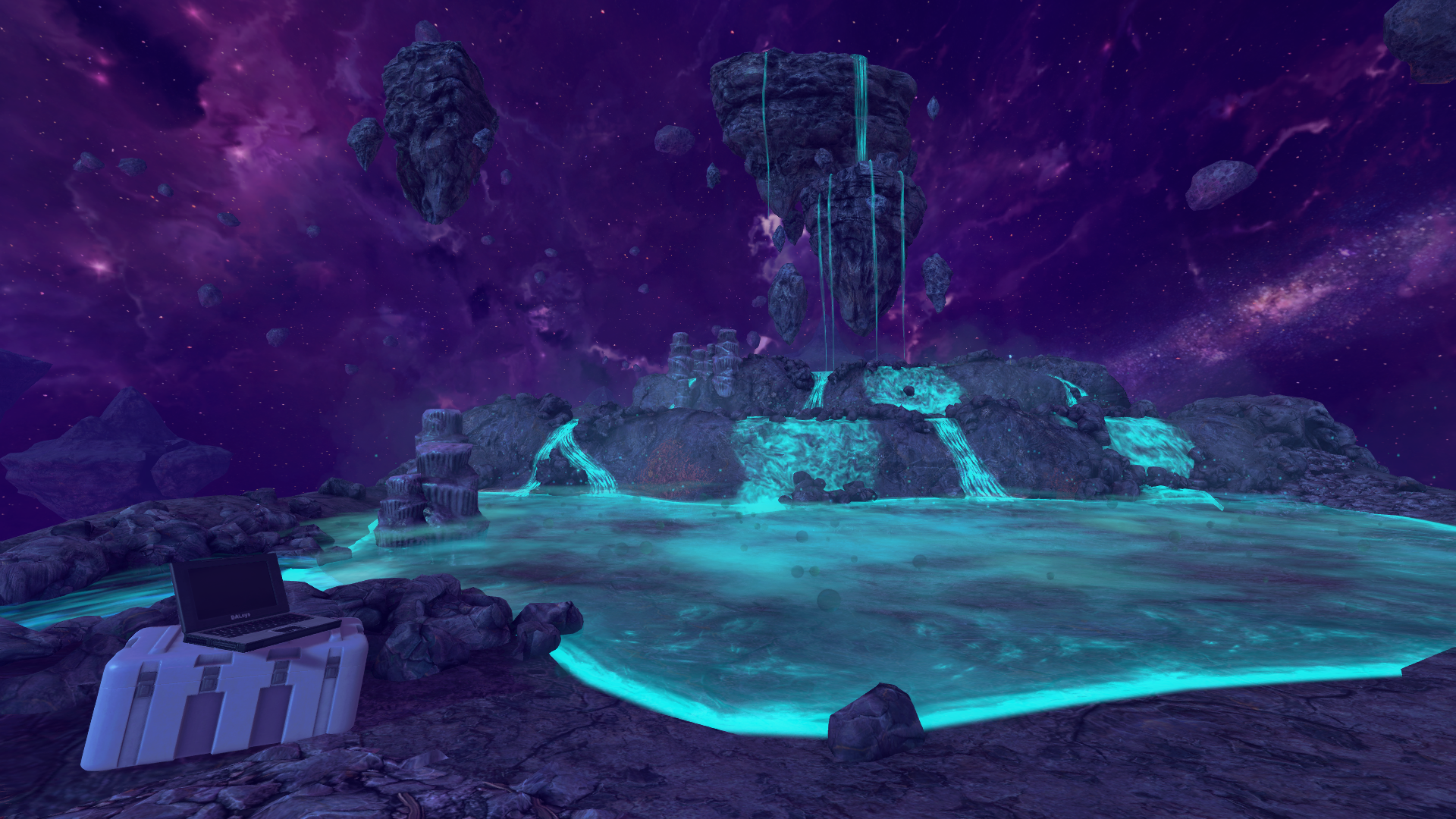

After playing the entire Black Mesa remake of Half-Life 1, I was absolutely amazed at the quality of the Xen levels. The beautiful organic alien world is made of glowing vegetation and flying creatures. The details are amazing and the interactive puzzles are so creative! This whole game blew me away and I'll never play the original Half-Life again. This is what gaming is all about!

Here are some creative screenshots in PNG 1920x1080

...

Jan

18

Enter the world of memory as Nilin, where your emotions and memories are digitized and can be exploited! Will the world be a better place if we can erase all the bad memories? Nilin starts with no memory of who she is, but her brother hacks the system and allows her to escape. Explore a future of memory control where robots rule and the underworld is full of manic zombies.

Melee your way through hordes of zombies, soldiers, robots and mini-bosses using...

Jan

06

One of my favorite ship interiors is in Borderlands 3!

Ever since Borderlands 1 the visuals, textures, graphics and shaders have improved incredibly.

I decided to load up the game and visit Sanctuary III for some wallpaper screenshots.

Each shot was taken with almost all settings on High and using the Photo Mode in the Esc menu.

I played around with focus and field-of-view for more dramatic shots.

Some are simply a wall with wallpaper-ish items.

Each screenshot is 1920x1080 PNG

Enjoy!...

Dec

27

Black Mesa is a modder-built total conversion of Half-Life using the Source engine. Anyone who has played a First Person Shooter on PC knows about Half-Life. It is one of the most original linear action sci-fi games ever made! Crowbar Collective has taken it upon themselves to reboot Half-Life, recreating the entire game from beginning to end on the more modern Source engine!

Black Mesa is so well done that I won't ever play the original Half-Life again!...
